Since Frankenstein, science fiction has worried about the consequences of creating artificial life. Would we make monsters (or robots, or monster-robots) that would destroy their creators? Or can we duplicate whatever it is that makes us human? (That begs the question of whether or not that’s even something to which any self-respecting monster—or machine—should aspire.) My first encounter with the question came in college, when I first saw Ridley Scott’s Blade Runner. The answers there were yes and empathy, with the film portraying replicants as more human than the real humans, rebelling against their creator(s), and also against the corporate system that enslaved them.

Twenty-odd years later, Martha Wells’ Network Effect (and the rest of the Murderbot Diaries) still grapples with the essence of that question, but also reframes it. She throws out the human/machine binary and focuses more closely on how the effects of capitalism, condemned by default in Blade Runner, are entwined with notions of personhood.

As Blade Runner’s crawl-text reveals, the Tyrell Corporation intended the replicants to be physically superior to humans, but also to be slave labor (military, industrial, and sexual) on the offworld colonies. This corporate-sponsored slavery is meant to horrify the audience, but at the same time to seem understandable: after all, the replicants aren’t really people…right? The film goes on to test that hypothesis with the Voight-Kampff test, which measures empathy. Of course the robots will fail.

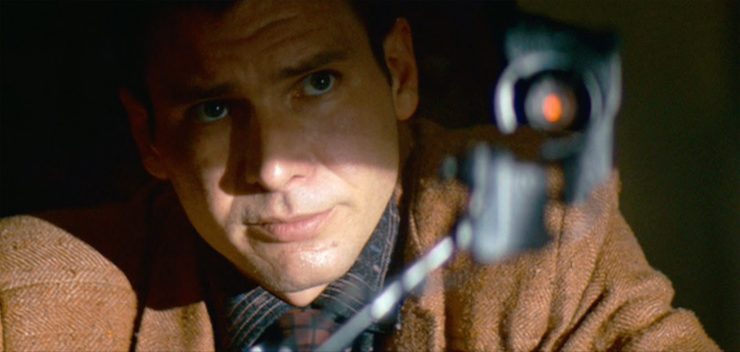

Except they don’t. In the opening scene, human Holden spins a scenario to replicant Leon: test-Leon sees a tortoise in the desert and flips it over. Then test-Leon does not flip the test-tortoise back over, even though its belly is baking in the sun. It needs Leon’s help, Holden murmurs flatly, but Leon’s not giving it. “What do you mean I’m not helping?” Leon demands. He grows visibly and increasingly agitated as the test continues, while Holden, impassive, continues to pose questions (until Leon shoots him). The film is already taking sides: the human doing his job does so mechanically, following his script (dare we say, without empathy?). The replicant, however, is emotionally leaky: nervous, angry, eventually violent. We might feel bad for Holden (ouch), but the camera close-ups on distraught Leon guarantee we empathize with him.

This pattern continues. Leon might have trouble with tortoises, but he loves Zhora. Roy can murder Tyrell, but weeps over Pris and spares Deckard. Deckard, a replicant who believes himself human, chooses to run away with Rachel, another replicant. The film’s actual humans, the members of Tyrell Corporations—Tyrell, JD, the technicians—are shown as unfeeling and mechanical. They have a job to do. They do it. Their inflexibility makes them more robotic than the replicants, and definitely less sympathetic. By the film’s end, we’re firmly on Team Replicant.

Blade Runner’s vision of empathy, though, is limited. The only way we can tell if someone cares about someone else is if there’s romantic interest. Proof of empathy is limited to cis-heterosexualized pairings: Leon and Zhora, Roy and Pris, Rachel and Deckard. Despite all that business about tortoises, what makes us human is…heterosexual monogamy, carved out on the edges of soul-crushing capitalism.

And then along comes Martha Wells with Murderbot. Wells’ future, like Blade Runner’s, starts off in a corporate, capitalist dystopia that strips agency from everyone (human and otherwise). Within the borders of the Corporate Rim, a person’s identity is entirely wedded to their corporate affiliation. Humans are company assets, sometimes indentured across generations to their corporations, their worth dependent on a corporation’s use for them. It’s even worse for the nonhuman sentiences. Murderbot is a SecUnit, a construct composed of cloned human tissue and inorganic material. Its job is to protect its corporate clients from whatever stupidity and danger they may encounter. Good behavior is guaranteed by a governor module, which Murderbot describes as feeling like “being shot by a high-grade energy weapon, only from the inside out.” Murderbot insists, with pride, that SecUnits are superior to human security. They don’t shoot unnecessarily. Their aim is better. They make better financial sense for security than humans…at the same time that they’re also disposable and controlled by force, rather than economics. As in Blade Runner, machine slavery is a good capitalist investment. Human lives might not matter much, but they matter just that little bit more, and you can abuse them (more) openly.

And what about that humanity? Wells’ future gives us a lot more variety: queer, trans, straight, and genders found only in particular colonies; single or married monogamously or, more commonly, married with multiple partners; friends, parents, second-mothers, siblings, daughters, uncles. Murderbot treats this human diversity as unremarkable, bordering on unimportant (it never remarks on its own physical features, and because it thinks sex is gross, never comments on anyone else’s attractiveness). What does surprise it is how socioeconomic alternatives to capitalism affect the humans who live in them. Its clients in All Systems Red and Network Effect hail from Preservation, a non-Rim world founded by survivors of an abandoned, left-for-dead corporate colony. Preservation’s society exists in ideological opposition to the Corporate Rim: communitarian and cooperative, all resources deemed “necessary” provided for free (healthcare, education, food, shelter). To Murderbot’s shock and initial discomfort, these clients treat it like a person. They’re careful of its feelings. They invite it to sit down in the crew seats. They respect its preferences. Preservation’s citizens treat Murderbot like a person, and the corporation citizens treat it like a machine, so the conclusion seems pretty straightforward. Capitalism sucks, and it makes its human citizens suck, too, whereas non-capitalist systems will treat non-humans fairly.

Except they don’t. Not exactly. Although Murderbot’s individual clients regard it as an independent person, Preservation law requires constructs to have human guardians to help them navigate society, ostensibly for the construct’s comfort…but also, by implication, for human comfort as well. No one is going to hurt the construct, sure, and humans care about its feelings…but they’re still going to exercise some kind of supervisory control, presumably to keep everyone safe. There is a separation of personhood: separate and not quite equal. There might not be a governor module, but there’s not freedom, either.

Then we get our third option: the Pansystem University of Mihira, which we see in the narrative primarily in the person of Perihelion (or, as Murderbot calls it, Asshole Research Transport: ART, for short). ART, like Murderbot, is an unsanctioned, armed machine intelligence in the Corporate Rim. ART, unlike Murderbot, is not shaped like a human. When it’s not acting as a crewed research vessel, ART goes on intelligence-gathering runs into the Rim, pretending to be a simple cargo bot pilot. ART gives Murderbot a ride between stations, but once it realizes Murderbot is a rogue SecUnit, it offers to surgically alter its body to help it pass as an augmented human. Because of ART’s aid, Murderbot can pass as human on Rim stations and save its non-corporate clients from corporate machinations and villainy.

Thus, altering Murderbot isn’t just illegal, it’s also subversive. Because ART offers Murderbot a choice (to pass as an augmented human, to become the rogue SecUnit media villain, to sit in a room watching downloaded media) without conditions, ART, and by extension the Pansystem University, demonstrates more than anti-capitalism, working actively against corporate interests and corporate systems by prioritizing individual choice and freedom. It’s not only about undermining corporate interests, but also spreading the power of self-governance…rooted in a conviction that all persons are capable and deserving of self-determination. That ethos, more than any potential cisheterosexual romance we see in Blade Runner, demonstrates true empathy.

Both ethos and effect prove contagious. In Network Effect, Murderbot simultaneously asks SecUnit Three to help save its clients while sending Three the code to hack its governor module. Murderbot has no guarantee that Three will choose to hack its governor module at all, much less, in its first act of freedom, help a strange SecUnit’s even stranger human associates. It can only offer Three the same choice ART offered it: agency without conditions. Three accepts, and immediately offers to help rescue Murderbot…as do ART’s newly rescued humans, and Murderbot’s own human friends. Empathy, it seems, connects all people.

The problem of what makes us human is not, and never has been, an inherently biological conflict, but it’s also more than simple socioeconomics. Blade Runner wasn’t wrong that empathy makes us people, and that corporate capitalism is dehumanizing, but it stopped imagining too soon: though the replicants prove themselves better people than real humans, in the end, the capitalist, corporate hellscape removes any real choice for them except doomed rebellion. Murderbot and Network Effect offer us alternatives to capitalism, while at the same time encouraging radical, real self-governance. It is not enough to hack our own governor modules. We have to show other people—from rogue SecUnits to Asshole Research Transports to every variety of human, augmented or otherwise—how to hack theirs, too.

K. Eason is a lecturer at the University of California, Irvine, where she and her composition students tackle important topics such as the zombie apocalypse, the humanity of cyborgs, and whether or not Beowulf is a good guy. Her previous publications include the On the Bones of Gods fantasy duology with 47North, and she has had short fiction published in Cabinet-des-Fées, Jabberwocky 4, Crossed Genres, and Kaleidotrope. When she’s not teaching or writing, Eason picks up new life skills, ranging from martial arts (including a black belt in kung fu!), to Viking sword and shield work, to yoga and knitting. She is the author of The Thorne Chronicles, published by DAW.

I’d disagree with that reading of Blade Runner. I saw it as Leon trying and failing to fake the appropriate human emotional responses – he knows that he needs to show some emotion or Holden will retire him, but he’s no idea how to. Look at his reactions – would any normal human react to a series of weird questions about turtles that way? Bored, amused, mildly irritated, yes – but Leon’s nervous from the start and his responses just go on getting stranger.

Holden, meanwhile, is gradually realising over the course of the interview that Leon isn’t human. There are plenty of reaction shots of Holden looking at the readouts after Leon’s answer that imply something’s wrong and Holden knows it. (Of course the audience doesn’t know what, yet, because the film’s only just started and we have no idea what this test is about.)

But Holden’s in exactly the opposite position to Leon. Leon has to show emotion or risk death. Holden doesn’t dare show emotion, or Leon will realise that Holden has seen through his pretence, in which case Leon will kill him. So Leon has to pretend to be human, and Holden has to pretend to be, well, mechanical.

Sorry, but a robot getting all twitchy over simple questions and shooting the questioner didn’t create empathy for me at all. Leon was a psycho. Poor Holden.

As an autistic woman, I can definitely relate to having the “wrong” emotional responses sometimes, and also to people thinking that means I don’t have any real emotions at all.

As a result, the Voig(h)t-Kampf test scenes are always extra uncomfortable for me, beyond the discomfort everyone watching is meant to feel, because I can’t help but wonder if I myself would fail such a test.

Anyway, I really liked this article. I had my phone reading it out to me, and I couldn’t help but do a silly grin and start wiggling my feet about the part where ART offers mods, or the hacking of the governor module.

So you’ve got me really interested in seeking out this other story. Especially because it shows 3 societal options instead of only 2, as I commonly see in other stories like this. Everything’s (seemingly) got to be a dichotomy. So it’s nice to read that this story doesn’t do that. (Even if “the commune has people’s needs met but is a bit stifling” isn’t the most original type of society.)

I don’t believe Deckard was a replicant, so I’m not too keen on that interpretation of Blade Runner. It’s an interesting take, though.

@1 – Completely agree. In addition, I would disagree with the author’s statement that the technicians were unfeeling and mechanical.

J.F. Sebastian, whom I believe the author mistakenly lists as JD, is portrayed as a caring and concerned character who helps the replicants out of the shared misfortune of a limited lifespan. His reward is to be murdered by the supposedly “sympathetic” artificial beings for no reason other than being upset at their violence. I would also go so far as to say that the eye technician, Chew, was not uncaring and mechanical, just insufficiently developed onscreen to determine motive.

Murderbot is a great series though.

Yeah, arguing that JF Sebastian was “uncaring and mechanical” is kind of a weird statement, but you have to say it if you’re going to argue that every human in Blade Runner is basically a psycho.

Proof of empathy is limited to cis-heterosexualized pairings: Leon and Zhora, Roy and Pris, Rachel and Deckard.

There is no evidence whatsoever that any of the characters listed here are cis. That’s your assumption – and, fair enough, it’s a reasonable assumption that doesn’t disagree with the text – but you can’t then criticise the text for it.

3: very interesting insight! The scene in which Deckard’s asked “Have you ever retired a human by mistake?” comes not much later.

Proof of empathy is limited to cis-heterosexualized pairings: Leon and Zhora, Roy and Pris, Rachel and Deckard.

Empathy is arguably demonstrated by Roy towards Deckard right at the end (rescuing Deckard; “Quite an experience to live in fear, isn’t it?”), and possibly by Gaff towards Deckard (or towards Deckard and Rachel), in allowing them to escape. More generally, empathy is not just “caring about someone else”: you can feel empathy towards someone you don’t love — even towards someone you (also) hate. (That’s why the notional tortoise is part of the test, despite its not being a “cis-heterosexualized pairing”. One could perhaps even argue that by reaching down and saving Deckard’s life when he’s about to fall to his death, Roy is demonstrating his response to the Voight-Kampf tortoise scenario: no, I won’t just let the tortoise bake to death in the sun.)

The film’s actual humans, the members of Tyrell Corporations—Tyrell, JD, the technicians—are shown as unfeeling and mechanical. They have a job to do. They do it. Their inflexibility makes them more robotic than the replicants, and definitely less sympathetic.

This is a strange misreading of the film. Tyrell is self-confident, smug, and arrogant, not “unfeeling and mechanical”. J.F. Sebastian is desperately shy, nervous and awkward, a bit smitten by Pris, and at least partly sympathetic/empathic towards the replicants — I think that’s part of his motivation (maybe combined with fear of Roy and Pris) for helping Roy meet with Tyrell. (That’s certainly not “a job to do”.) And he is a sympathetic character — we see his awkwardness, his loneliness — and his fear when Roy turns on him.

(Do we ever see any “technicians”, working for Tyrell or otherwise?)

(Do we ever see any “technicians”, working for Tyrell or otherwise?)

The eye specialist might count as a technician. But he’s far from unfeeling. He’s fascinated by Roy and then terrified. We don’t see any human Tyrell employees at all; just Tyrell himself, Leon and Rachel.

And JF Sebastian, of course.

ajay @ 8 & 9

Yes… those are possibilities. I tend to interpret Chew’s plaint that he just “designs eyes” — and the fact that he seems to have his own lab and store(?) located away from the Tyrell Corporation — to mean he’s more than just a “technician”. And, as you point out, he’s hardly “unfeeling and mechanical.”

About Blade Runner and the Voight-Kampf test. There is a big difference between the source material (Do androids…) and the film. There are a lot of things that revolve around empathy in the novel: the empathy with the suffering Mercer (or Christ’s ordeal), the emotional link with animals (even fake ones for those who cannot afford the natural ones), and the lack of empathy that characterizes replicants. Exactly like real-world psychopaths: about 1% of the population who lack empathy altogether. Replicants are actually the bad guys in the novel, and the test tries to detect that lack of empathy. The test is the equivalent of detecting psychopaths, those who you can meet anywhere, including school, the workplace, and so on.

Then, the film is arguably something different. It revolves around how the replicants’ lives are precious, as well as anyone else’s. Deckard shows empathy, Rachel does, and so do Roy and the rest of the runaway slaves. Their situation is denounced, etc.

So, in the film, what does the test detect? Not lack of empathy, but possibly differences in how emotions are lived and expressed. Not much is said. It just detects differences, but, lack of empathy? nope.

Another residue from the book: the appreciation of animals, and artificial animals. It helps establish the environmental debacle that the world of the film has suffered as well as how the state of the technology allows for such developments (with replicant construction being top notch).

@10

The guy who designs eyes… A contractor for Tyrell. They seem to outsource that piece of design.

@4: it is interesting. Ridley (the director) firmly believes that Deckard is a replicant. But the script writer Fancher says he wrote it with Deckard being human. I must admit I lean more towards Fancher’s interpretation myself as it strengthens the point that the replicants are very human. Also Deckard being a replicant raises a bunch of logical plot issues as he is very very badly suited for the role.

One of the things that the article ignores, and granted it isn’t emphasized in the film, is *why* replicants on Earth are supposed to be “retired”. They had to have killed their owners in order to show up on Earth. It is proof of their lack of empathy and psychopathy that they put their selfish desires before the lives of others. PKD, the author of the book the film is loosely based on, is much less clear on who the villains in this story are, particularly as the replicants are far more like modern humans and very few of us would pass a Voight-Kampf empathy test, neurotypical or not.

The Earth of the book is much more suited to the replicants, also. They are offered as incentive for humans with non-mutated reproductive capacity to emigrate off world – which is extremely difficult and lonely – because the background radiation is rendering on world humanity sterile and stupid (chickenheaded). Humanity on Earth is dying. Humanity off world is miserable.

Replicants may lack humanity’s empathy for “living” things (which are dying on the dying Earth in any event), but they are sort of protective of each other. Rachel (*not* a love interest in the book) takes revenge on Deckard for killing her fellow replicants by throwing his goat, purchased with their bounty money, off the roof of the apartment.

I won’t pretend PKD isn’t a problematic writer, because he really was, but his novels and stories are intense explorations on the nature of personhood, memory and reality.

@3: OMG, you *MUST* read the Murderbot Diaries immediately. I’m a woman on the spectrum too, and they spoke to me like almost no other books have ever done. I understood myself better through the lens of SecUnit. I absolutely think that it’s possible to read SecUnit as autistic (and I do).

A more general comment: one could include asexual in the description of Wells’ sexually open and generous universe, because SecUnit is emphatically ace, noting that even if it had sex-related parts, it didn’t think it would be at all interested in sex (that its pronoun is ‘it’ seems to underline how deeply it dislikes the whole notion of gender, even as a spectrum).

I love that Wells’ world features women in charge of anything important, and it’s interesting (although I haven’t worked out any large academic argument about it, despite being an academic) that this is one of the few futures I’ve ever read in which humans’ colonizing of space is the result of our unchecked fecundity, only *without* any accompanying disaster or mass starvation on Earth … almost as if birth and families aren’t automatically to be abhorred or considered an obstacle to be overcome before one can do great things.

“Tor.com members … never have to prove they’re not robots.”

What were we talking about again? <clicks “I’m not a robot” box>